IBM's Unrivaled Legacy of Innovation

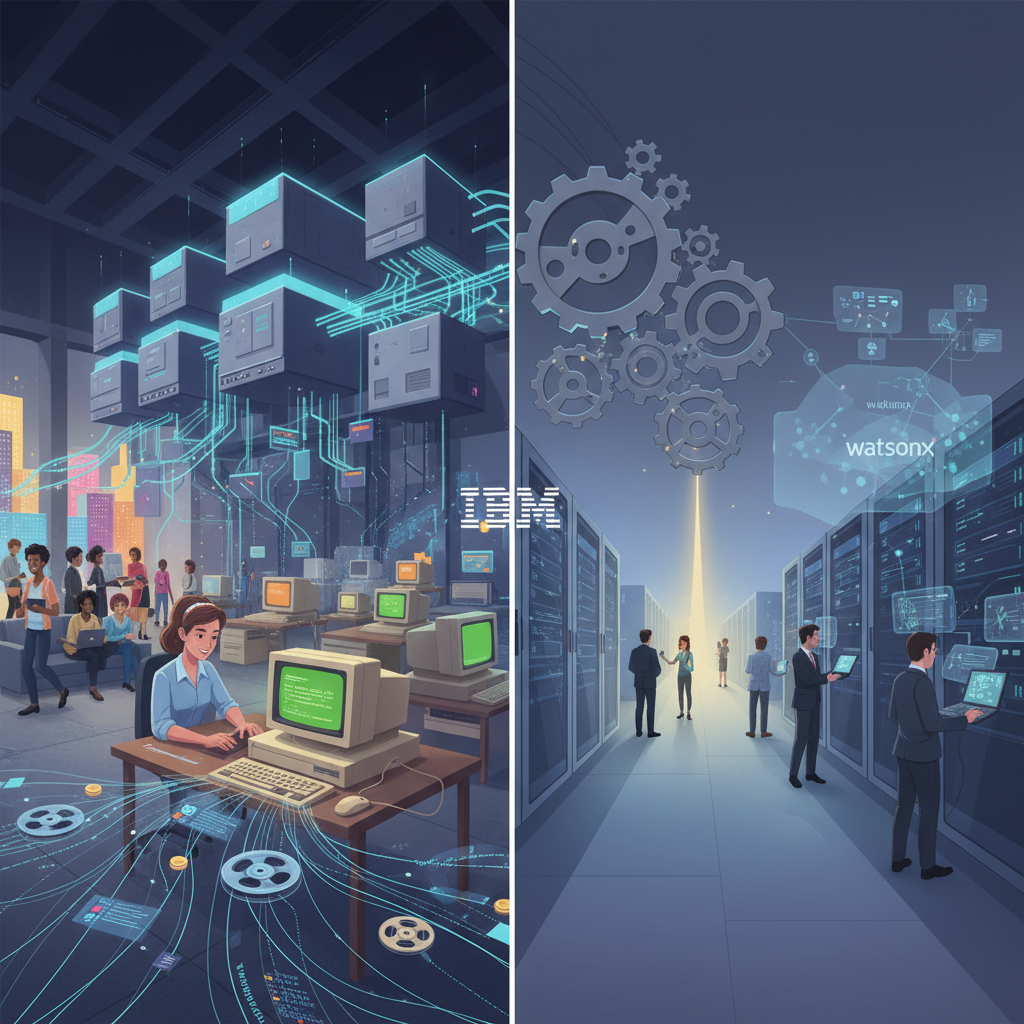

IBM is a foundational pioneer in computing, responsible for numerous innovations that shaped the digital world. Its influence spans from early computing to modern advancements.

Early Computing

IBM electrified early computing with tabulating machines in the 1930s and introduced its first commercial scientific computer, the IBM 701, in 1952. These foundational steps laid the groundwork for the digital age.

Mainframe Dominance

The IBM System/360, launched in 1964, established a standardized computing architecture that went on to dominate enterprise IT for decades. Its modular design and upward compatibility were revolutionary.

Key Innovations

IBM's contributions extended to various fields:

- Programming Languages: Developed FORTRAN (1957) and, in the 1970s, created SEQUEL, the precursor to SQL.

- Storage: Introduced the hard disk drive (1956) and the floppy disk, revolutionizing data storage.

- Data Management: Pioneered the relational database concept in the 1970s, which is fundamental to modern data systems.

- Everyday Technologies: Responsible for innovations like the magnetic stripe card, the Universal Product Code (UPC) barcode, and Dynamic Random-Access Memory (DRAM), and an early contributor to Automated Teller Machine (ATM) technology.

Personal Computer Revolution

IBM launched the IBM Personal Computer (PC) in 1981, a move that legitimized personal computing for business, accelerated the PC revolution, and forever changed the landscape of personal technology.

Continued Innovation

Even today, IBM continues to push boundaries in artificial intelligence (IBM Watson), cloud computing, and quantum computing, consistently securing a high volume of U.S. patents year after year.

The Retreat: Ceding Ground in Personal Computing and Consumer Tech

Despite its profound contributions, IBM gradually relinquished leadership in crucial sectors, particularly consumer-facing technology. This strategic shift marked a turning point in its trajectory.

IBM PC Strategic Misstep

- Embraced an open architecture and outsourced key components.

- Licensed DOS from Microsoft on non-exclusive terms, leaving Microsoft free to license it to other manufacturers.

- This led to a proliferation of "IBM-compatible" clones, eroding IBM's market share significantly.

- By outsourcing the operating system and processor, IBM ceded crucial control of the PC ecosystem.

Corporate Culture

A reluctance within IBM to "cannibalize" its highly profitable mainframe business with cheaper PC solutions contributed significantly to its PC decline, a classic innovator's dilemma.

Exit from PC Hardware

Ultimately, IBM sold its personal computer division to Lenovo in 2005, marking its complete withdrawal from the PC hardware market it helped create.

Missed Consumer Market

IBM's steadfast enterprise focus meant it largely missed the burgeoning consumer technology market, allowing companies like Apple, Microsoft, and Google to dominate these new frontiers.

Mass-Market Adoption

The company struggled to capitalize on new technologies like internet search platforms and consumer-facing AI, allowing other players to lead in mass adoption and market penetration.

The Enterprise Software Paradox: Continued Presence, Shifting Leadership

IBM's role in enterprise software presents a nuanced picture of continued presence amidst shifting leadership dynamics.

Historical Strength

The System/360, and its successors, provided unparalleled enterprise IT infrastructure, creating a robust ecosystem for businesses worldwide.

Strategic Shift

IBM strategically invested in and acquired numerous software companies, including Lotus, Tivoli, and Cognos, culminating in the significant acquisition of Red Hat in 2019, bolstering its hybrid cloud capabilities.

Current Status

Software now accounts for over 40% of IBM's annual revenue, making the company a formidable force in hybrid cloud and enterprise AI, with watsonx as its AI platform and IBM Z as its mainframe platform. IBM also became the industry's top middleware producer.

Challenges

- Struggled to adapt to networked Unix machines and the internet in the late 1980s/1990s, diminishing mainframe exclusivity.

- Shifted to services, but profitability was often low, impacting overall financial health.

- Spun off its managed IT infrastructure business as Kyndryl (announced in 2020, completed in 2021) as enterprises increasingly moved to cloud-native solutions.

- Experienced slower growth in core cloud software (hybrid cloud unit/Red Hat) compared to agile rivals like Amazon and Microsoft.

Criticisms

Organizational bureaucracy and insufficient investment in cloud infrastructure hindered IBM's agility and competitive edge. As a result, IBM's enterprise leadership is no longer unchallenged.

The "Why": Strategic Missteps, Short-Term Focus, and Organizational Inertia

The decline in IBM's leadership in certain sectors can be attributed to a complex interplay of strategic errors, short-term financial focus, and internal organizational challenges.

Prioritization of Short-Term Services Revenue

The shift to a services-led model, while preventing financial losses, may have "compromised IBM's concentration on the technological innovation that established its reputation." Services offer consistent revenue but potentially lower margins and divert focus from long-term, disruptive research and development.

Licensing Away Key Innovations

The MS-DOS licensing for the IBM PC empowered competitors and inadvertently created the very market that eventually marginalized IBM. Later attempts to regain control with proprietary architectures (MCA, OS/2) were largely rejected by an industry that had embraced open standards.

Retreat from Mass Adoption Platforms

An enterprise-first mindset led IBM to overlook the burgeoning consumer market and the internet search boom, and to adapt slowly to cloud infrastructure, allowing new giants to dominate these critical paradigms. Poor management decisions, such as underestimating Microsoft's intentions during the OS/2 collaboration, exacerbated these issues.

Organizational Inertia and Bureaucracy

IBM's immense size and established processes slowed its response to technological changes. An internal reluctance to embrace cheaper PC solutions that might "cannibalize" profitable mainframe revenue represented a classic innovator's dilemma, ultimately costing the company market share.

A Legacy Redefined

IBM's story is a compelling case study in technological leadership dynamics. It demonstrably "invented the future" multiple times but subsequently "abandoned" leadership in certain areas due to a combination of strategic outsourcing, a focus on short-term revenue, and significant organizational challenges.

Current Standing

Despite these shifts, IBM remains a formidable force in enterprise IT, particularly in hybrid cloud and artificial intelligence, continuing to shape the technological landscape.

Lesson

The profound lesson from IBM's journey is that inventing the future is distinct from maintaining leadership in it amidst relentless innovation and shifting markets. Continuous adaptation is key.

Conclusion

IBM's legacy is one of unparalleled innovation, yet it is also a stark illustration of how even technological pioneers can struggle to maintain control over the very futures they helped create. Its ongoing evolution continues to offer valuable insights into the dynamics of the tech industry.