AITB International Conference, 2019

Kathmandu, Nepal

My Youtube Channel

Please Subscribe

Flag of Nepal

Built in OpenGL

World Covid-19 Data Visualization

Choropleth map

Word Cloud in Python

With masked image

Tuesday, January 17, 2023

Solved | E11000 duplicate key error index in mongodb mongoose

Follow the given steps in order to solve the problem:

1. Use MongoDB compass for ease.

2. Open the "collection" in which you are facing a problem.

3. Delete all the indexes that were showing errors through MongoDB compass.

4. This should solve your problem.

If the error persists then either delete the collection whose attributes were showing errors or delete an entire "collections" of that particular database and create new ones.

You can also follow the steps shown in the video below:

Wednesday, December 7, 2022

N-grams for text processing

N-gram is an N sequence of words. It can be a unigram (one word), bigram (sequence of two words), trigram (sequence of three words), and so on. It focuses on a sequence of words. Such a method is very useful in speech recognition and predicting input text. It helps us to predict the next words that could occur in a given sequence. Search engines also use the n-gram technique to predict the next word while a search query is typed in a search bar.

Let us consider a sentence:

“This is going to be an amazing experience.”

Unigram for the above sentence can be written as:

- "This"

- "is"

- "going"

- "to"

- "be"

- "an"

- "amazing"

- "experience"

Bigram for the above sentence can be written as:

- “This is”

- “is going”

- “going to”

- "to be"

- "be an"

- "an amazing"

- "amazing experience"

- "This is going"

- "is going to "

- "going to be"

- "to be an"

- "be an amazing"

- "an amazing experience"

With n-grams, we can use a bag of n-grams (for example, a bag of bigrams) instead of only using a bag of words. A bag of bigrams or trigrams is more powerful than using just a bag of words as it takes the context into consideration as well.

Tuesday, December 6, 2022

Word embedding techniques for text processing

It is difficult to perform analysis on the text so we use word embedding techniques to convert the texts into numerical representation. This is also called vectorization of the words. It is a representation technique for text in which words having same meaning are given similar representation. It helps to extract features from the text.

i. Bag of Words (BoW)

It is one of the most widely used technique for word embedding. This method focuses on the frequency of the words. It is easy to implement and also efficient at the same time. In Python, we can use CountVectorizer() function from sklearn library to implement Bag of Words. A graphical representation of bag of Words has been shown in the figure below.

The reason for selecting BoW as word embedding technique is that it is still widely used technique due to its simplicity in implementation. It is also very useful when working on a domain specific dataset. If not entirely, it does give some idea to the researchers regarding the performance of the work.

Fig: Representation of BoW

ii. TF-IDF (Term Frequency- Inverse Document Frequency)

Term Frequency is used to count the number of words in a document. It is not necessary that the words with higher frequencies tend to represent significant information about the document. A word appearing in a document for many times does not mean it is relevant and significant all the time. Many times, words with less frequencies carry more significant meanings about the document. One way to normalize the frequency of words is to use TF-IDF. Inverse Document Frequency (IDF) is used to calculate the significance of rare words or words with less frequencies.

The reason for using TF-IDF is it can be used to find and remove stop words in the textual data. It helps to find unique identifier in a text. It helps to understand the importance of a words in entire document which in turn can help in text summarization.

Easy understanding of Confusion matrix with examples

Confusion matrix is an important metric to evaluate the performance of classifier. The performance of a classifier depends on their capability predict the class correctly against new or unseen data. It is one of the easiest metrics for finding the correctness and accuracy of the model. The confusion matrix in itself is not a performance measure but all the performance metrics are based on confusion matrix. The ideal situation for any classification model would be when FP=0 and FN=0 but that’s not the case in real life. Depending upon the situation, we might want to minimize either FP or FN.

Fig 3.10 Confusion Matrix

Let us consider both the situations with the help of example.

Case 1: Minimizing FN

Let,

1 = person having cancer

0 = person not having cancer

In this case, having some False Positive (FP) cases might be okay because classifying a non-cancerous person as cancerous does not affect a lot because on further test we will anyway find out that the particular person does not have cancer. But having False Negative (FN) cases can be hazardous because classifying a cancerous person as non-cancerous can cause serious threat to the life of that person. So, in this case we need to minimize FN.

Case 2: Minimizing FP

Let,

1 = Email is a spam

0 = Email is not spam

In this situation, having False Positive (FP) cases i.e., wrongly classifying non-spam or important email as spam can cause serious damage to the business, financial loss to the individuals and so on. Thus, in this situation we need to minimize FP.

Various metrics based on confusion matrix

Accuracy: It is the number of correct predictions made by the model over all kinds of predictions made. It is a good measure when the target variable classes in the data are nearly balanced.

Accuracy = (TP+TN)/(TP+FP+TN+FN)

Precision: It is defined as the ratio of number of positive samples correctly classified as positive to the total number of samples classified as positive (either correctly or incorrectly). It reflects how reliable the model is in classifying the samples as positive. It is used if the problem is sensitive to classifying a sample as positive in general.

Precision = TP/(TP+FP)

Recall or sensitivity: It is defined as the ratio of number of positive samples correctly classified as positive to the total number of actual positive samples. It measures the model’s ability to detect positive samples. Higher the recall value, more positive samples are detected. It is independent of how the negative samples are classified. It is used if the goal is to detect all the positive samples without caring whether the negative samples would be misclassified as positive.

Recall = TP/(TP+FN)

Topic Modeling: Working of LSA (Latent Semantic Analysis) in simple terms

LSA (Latent Semantic Analysis) is another technique used for topic modeling. The main concept behind topic modeling is that the meaning behind any document is based on some latent variables so we use various topic modeling techniques to unravel those hidden variables i.e., topics so that we can make sense of the given document. LSA is mostly suitable for large sets of documents. It converts the documents into a document term matrix before actually deriving topics from the documents.

Working of LSA:

The given text is converted into the document-term matrix using either bag of words or the Term Frequency- Inverse Document Frequency.

Then, using Truncated Singular Value Decomposition (SVD). It is at this stage the topics within the documents are identified. Mathematically, it can be given as,

Though it may look difficult to understand at first glance, in simple terms what the above formula represents is that it simply decomposes a high dimensional matrix into smaller matrices i.e., u, s, and v, where,

A = n*m document-term matrix (n = no. of documents and m = no. of words)

U = n*r document-topic matrix (n = no. of documents and r = no. of topics)

S = r*r matrix (r = no. of topics)

V = m*r word-topic matrix (m = no. of words and r = no. of topics)

Finally, we can now classify which document belongs to which topics.

Schematic diagram of LSA algorithm

Topic Modeling: Working of LDA (Latent Dirichlet Allocation) in simple terms

Topic modeling is an unsupervised method used to perform text analysis. When we are given large sets of unlabeled documents, it is very difficult to get an insight into the discussions upon which the documents are based upon. Here comes the role of topic modeling. It helps to identify a number of hidden topics within a set of documents. Based on those identified topics, the entire sets of documents can be classified. Also, it can be used to predict the topic of upcoming documents. It can have various applications like customer reviews, story genre prediction, tweets classification, and so on.

LDA (Latent Dirichlet Allocation) is one of the most used and well-known techniques to perform topic modeling.

The word latent means hidden because we don’t know what topics a set of documents contain. Based on the probability distribution of the occurrence of words in each document, they are allocated to defined topics.

Working of LDA:

What we want for LDA is to learn the topic mix in each document and also learn the word mix in each document.

We choose a random number of topics for the given dataset.

Assign each word in each given document to one of the defined topics, randomly.

Now, we go through each and every word and to which topic those words are assigned in each document. Then, it is analyzed how often the topic occurs in the document and how often the word occurs in the topic as a whole. Based on this analysis, the new topic is assigned to the given word.

It goes through a number of such iterations and finally, the topic will start making sense. We can analyze those found topics and assign a suitable name to those topics which best describe them.

Fig: Schematic diagram of LDA algorithm

Bi-LSTM in simple words

In traditional neural networks, inputs and outputs were independent of each other. To predict next word in a sentence, it is difficult for such model to give correct output, as previous words are required to be remembered to predict the next word. For example, to predict the ending of a movie, it depends on how much one has already watched the movie and what contexts have arrived to that point of time in the movie. In the same way, RNN remembers everything. It overcomes the shortcomings of traditional neural network with the help of hidden layers. Because of the quality to remember the previous inputs, it useful in prediction of time series. This is called Long Short-Term Memory (LSTM).

Fig: LSTM Architecture

Combining two independent RNN together forms a Bi-LSTM (Bidirectional Long Short-Term Memory). It allows the network to have both forward and backward information. Bi-LSTM gives better result as it takes the context into consideration.

Example:

In NLP, to find the next word, it is not that we need only the previous word but also the upcoming word. As shown in the example above, to predict what comes after the word “Teddy”, we can’t be dependent only upon the previous word (which is “said” in this case) because it same in both the cases. Here, we need to consider the context as well. If we predict “Roosevelt” in the first case then it will be out of context as it will read as [He said, “Teddy Roosevelt are on sale!”]. So, Bi-LSTM is important to taken context into consideration.

Fig: Bi-LSTM Architecture

Topic Modeling

Topic modeling is an unsupervised technique as it is used to perform analysis on text data having no label attached to it. As the name suggests, it is used to discover a number of topics within the given sets of text like tweets, books, articles, and so on. Each topic consists of words where the order of the words does not matter. It performs automatic clustering of words that best describe a set of documents. It gives us insight into a number of issues or features users are talking about, as a group.

For example, let us say a company has launched the software in the market and receives a number of feedback regarding various product features within a specified time period. Now, rather than going through each review one by one, if we apply topic modeling, we will come to know how users have perceived the various features of the product very quickly. It is one of the essential techniques to perform text analysis on unstructured data. After performing topic modeling, we can even perform topic classification to predict under which topic the upcoming reviews fall. There are various techniques to perform topic modeling, among which LDA is considered to be the most effective one.

Challenges of Sentiment analysis

Lack of availability of enough domain-specific dataset

Multi-class classification of the data

Difficult to extract the context of the post made by the users

Handling of neutral posts

Analyzing the posts of multiple languages

Friday, March 18, 2022

Sunday, February 20, 2022

Saturday, June 26, 2021

Friday, June 25, 2021

Saturday, June 19, 2021

Wednesday, June 9, 2021

Sunday, June 6, 2021

Saturday, June 5, 2021

Friday, June 4, 2021

Wednesday, June 2, 2021

Saturday, November 28, 2020

Visualizing Decision tree in Python (with codes included)

List of libraries required to be installed (if not already installed). Here installation is done through Jupyter Notebook. For terminal use only "pip install library-name".

#import sys

#!{sys.executable} -m pip install numpy

#!{sys.executable} -m pip install pandas

#!{sys.executable} -m pip install sklearn

#!{sys.executable} -m pip install hvplot

#!{sys.executable} -m pip install six

#!{sys.executable} -m pip install pydotplus

#!{sys.executable} -m pip install python-graphviz

Importing libraries:

import numpy as np

import pandas as pd

from sklearn.tree import DecisionTreeClassifier

from sklearn import tree

import hvplot.pandas

About the dataset

Imagine that you are a medical researcher compiling data for a study. You have collected data about a set of patients, all of whom suffered from the same illness. During their course of treatment, each patient responded to one of 5 medications, Drug A, Drug B, Drug c, Drug x and y.

Part of your job is to build a model to find out which drug might be appropriate for a future patient with the same illness. The feature sets of this dataset are Age, Sex, Blood Pressure, and Cholesterol of patients, and the target is the drug that each patient responded to.

It is a sample of binary classifier, and you can use the training part of the dataset to build a decision tree, and then use it to predict the class of a unknown patient, or to prescribe it to a new patient.

Let's see the sample of dataset:

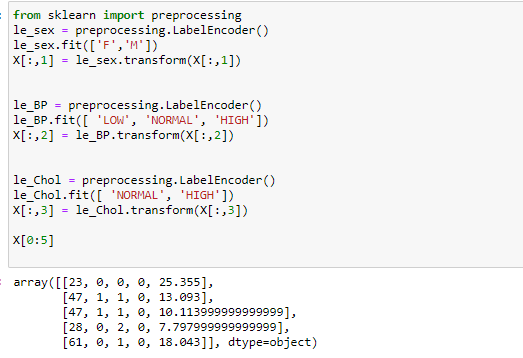

Pre-processing

Using my_data as the Drug.csv data read by pandas, declare the following variables:

- X as the Feature Matrix (data of my_data)

- y as the response vector (target)

- Remove the column containing the target name since it doesn't contain numeric values.

Now we can fill the target variable.

Setting up the Decision Tree

We will be using train/test split on our decision tree. Let's import train_test_split from sklearn.cross_validation.

from sklearn.model_selection import train_test_split

Now train_test_split will return 4 different parameters. We will name them:

X_trainset, X_testset, y_trainset, y_testset

The train_test_split will need the parameters:

X, y, test_size=0.3, and random_state=3.

The X and y are the arrays required before the split, the test_size represents the ratio of the testing dataset, and the random_state ensures that we obtain the same splits.

Modeling

We will first create an instance of the DecisionTreeClassifier called drugTree.

Inside of the classifier, specify criterion="entropy" so we can see the information gain of each node.

We can use "gini" criterion as well. Result will be the same. Gini is actually a default criteria for decision tree classifier.

From the graph below, we can see the accuracy score is highest at max-depth=4 and it remains constant

thereafter so we have used max-depth=4 in this case.

We can also plot max-depth vs accuracy score for both testset and trainset:

For testset:

For trainset:

Now, plotting the graph of max-depth vs accuracy score for both trainset and testset:

Visualization

Drawing Decision path (more readable form of decision tree):

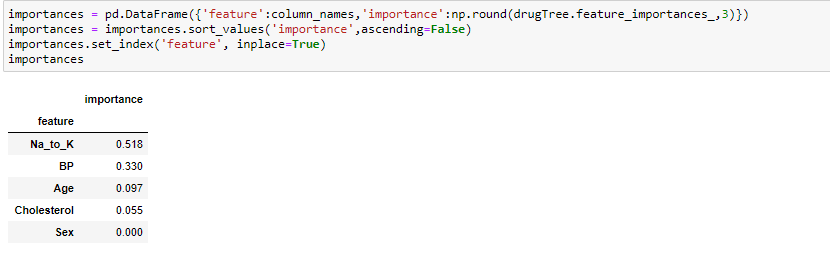

Important features for classification

The github link to the program can be found here.

Tuesday, November 10, 2020

Python code to extract Temporal Expression from a text (Using Regular Expression)

#Method 1

Code:

import re

print('Enter the text:')

text = input()

months='(Jan(?:uary)?|Feb(?:ruary)?|Mar(?:ch)?|Apr(?:il)?|May|Jun(?:e)?|Jul(?:y)?|Aug(?:ust)?|Sep(?:tember)?|Oct(?:ober)?|(Nov|Dec)(?:ember)?)'

re1=r'\w?((mor|eve)(?:ning)|after(?:noon)?|(mid)?night|today|tomorrow|(yester|every)(?:day))'

re2=r'\d?\w*(ago|after|before|now)'

re3=r'((\d{1,2}(st|nd|rd|th)\s?)?(%s\s?)(\d{1,2})?)' % months

re4=r'\d{1,2}\s?[a|p]m'

re5=r'(\d{1,2}(:\d{2})?\s?((hour|minute|second|hr|min|sec)(?:s)?))'

re6=r'(\d{1,2}/\d{1,2}/\d{4})|(\d{4}/\d{1,2}/\d{1,2})'

re7=r'(([0-1]?[0-9]|2[0-3]):[0-5][0-9])'

re8=r'\d{4}'

relist= [re1, re2, re3, re4, re5, re6, re7, re8]

print("\n\nTemporal expressions are listed below:\n")

for exp in relist:

match = re.findall(exp,text)

for x in match:

print(x)

Output:

Enter the text:

I get up in the morning at 6 am. I have been playing cricket since 2004. My birth date is 1997/9/8. It has been 2 hours since I am studying. The time right now is 12:45. He is coming today. I study till midnight everyday. It takes 2 mins to solve this problem. He is coming in January. He went abroad on 2nd March, 1999. He was working here 5 years ago.

Temporal expressions are listed below:

('morning', 'mor', '', '')

('today', '', '', '')

('midnight', '', 'mid', '')

('everyday', '', '', 'every')

now

ago

('January', '', '', 'January', 'January', '', '')

('2nd March', '2nd ', 'nd', 'March', 'March', '', '')

6 am

('2 hours', '', 'hours', 'hour')

('2 mins', '', 'mins', 'min')

('', '1997/9/8')

('12:45', '12')

2004

1997

1999#Method 2Code:import reprint('Enter the text:')

text = input()

text=list(text.split("."))

months='(Jan(?:uary)?|Feb(?:ruary)?|Mar(?:ch)?|Apr(?:il)?|May|Jun(?:e)?|Jul(?:y)?|Aug(?:ust)?|Sep(?:tember)?|Oct(?:ober)?|(Nov|Dec)(?:ember)?)'

re1=r'\w?((mor|eve)(?:ning)|after(?:noon)?|(mid)?night|today|tomorrow|(yester|every)(?:day))'

re2=r'\d?\w*(ago|after|before|now)'

re3=r'((\d{1,2}(st|nd|rd|th)\s?)?(%s\s?)(\d{1,2})?)' % months

re4=r'\d{1,2}\s?[a|p]m'

re5=r'(\d{1,2}(:\d{2})?\s?((hour|minute|second|hr|min|sec)(?:s)?))'

re6=r'(\d{1,2}/\d{1,2}/\d{4})|(\d{4}/\d{1,2}/\d{1,2})'

re7=r'(([0-1]?[0-9]|2[0-3]):[0-5][0-9])'

re8=r'\d{4}'

relist= [re1, re2, re3, re4, re5, re6, re7, re8]

re_compiled = re.compile("(%s|%s|%s|%s|%s|%s|%s|%s)" % (re1, re2, re3, re4, re5, re6, re7, re8))

print("\n\n Output(with temporal expression enclosed within square bracket:\n")

for s in text:

print (re.sub(re_compiled, r'[\1]', s))Output:Enter the text: I get up in the morning at 6 am. I have been playing cricket since 2004. My birth date is 1997/9/8. It has been 2 hours since I am studying. The time right now is 12:45. He is coming today. I study till midnight everyday. It takes 2 mins to solve this problem. He is coming in January. He went abroad on 2nd March, 1999. He was working here 5 years ago. Output(with temporal expression enclosed within square bracket: I get up in the [morning] at [6 am] I have been playing cricket since [2004] My birth date is [1997/9/8] It has been [2 hours] since I am studying The time right [now] is [12:45] He is coming [today] I study till [midnight] [everyday] It takes [2 mins] to solve this problem He is coming in [January] He went abroad on [2nd March], [1999] He was working here 5 years [ago]